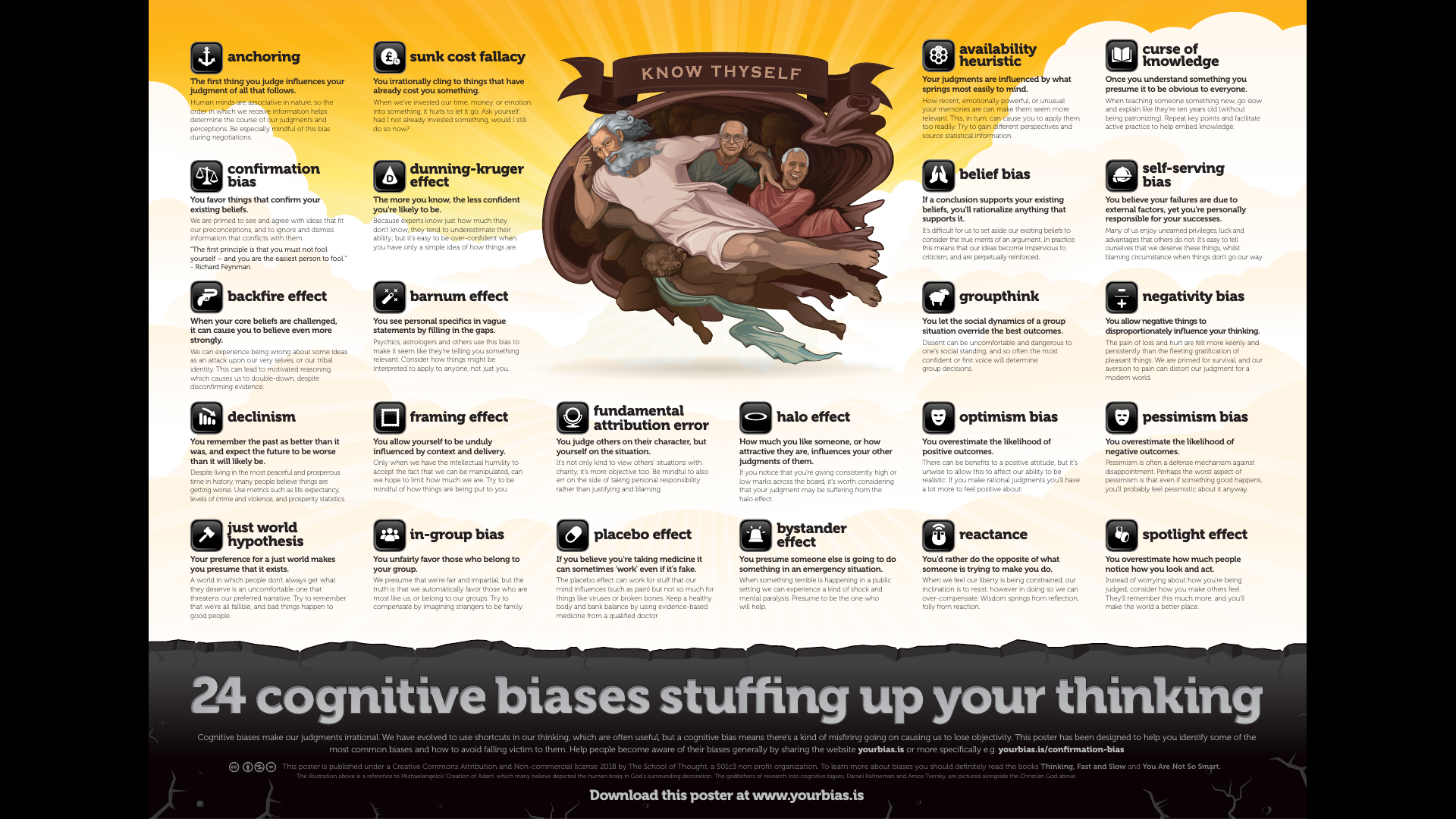

24 most common cognitive biases

School of Thought did an amazing job describing some of the most common cognitive biases in plain English. Study them and improve your decisions.

Table of Contents

Cognitive biases are systematic patterns in which we perceive reality differently from how it objectively is.

These biases can interfere with how we make sense of and interpret what's happening around us. For example, when you are receiving feedback, you might subconsciously filter out or discount everything that does not align with your self-perception. That would be a form of confirmation bias.

Being aware of different types of cognitive biases is a valuable step towards strengthening your thinking and decision-making muscles. Sometimes recognizing an existing bias is enough to dramatically reduce the risk of making an expensive decision error.

You can study School of Thought's descriptions on their interactive website, or read them reproduced together below.

If you'd like to get better at overcoming the biases listed below, I suggest the following guide:

Anchoring

The first thing you judge influences your judgment of all that follows.

Human minds are associative in nature, so the order in which we receive information helps determine the course of our judgments and perceptions. For instance, the first price offered for a used car sets an ‘anchor’ price which will influence how reasonable or unreasonable a counter-offer might seem. Even if we feel like an initial price is far too high, it can make a slightly less-than-reasonable offer seem entirely reasonable in contrast to the anchor price.

Be especially mindful of this bias during financial negotiations over houses, cars, and salaries. The initial price offered has been shown to have a significant effect.

The sunk cost fallacy

You irrationally cling to things that have already cost you something.

When we've invested our time, money, or emotion into something, it hurts us to let it go. This aversion to pain can distort our better judgment and cause us to make unwise investments. A sunk cost is one we can't recover, so it's rational to disregard it when deciding what to do next. For instance, if you've paid for a meal but only feel like eating half of it, it's irrational to keep stuffing your face just because 'you've already paid for it', especially since you're also wasting time doing so.

To regain objectivity, ask yourself: had I not already invested something, would I still do so now? What would I counsel a friend to do if they were in the same situation?

The availability heuristic

Your judgments are influenced by what springs most easily to mind.

How recent, emotionally powerful, or unusual your memories are can make them seem more relevant. This, in turn, can cause you to apply them too readily. For instance, when we see news reports about homicides, child abductions, and other terrible crimes it can make us believe that these events are much more common and threatening to us than is actually the case.

Try to gain different perspectives and relevant statistical information rather than relying purely on first judgments and emotive influences.

The curse of knowledge

Once you understand something, you presume it to be obvious to everyone.

Things make sense once they make sense, so it can be hard to remember why they didn't. We build complex networks of understanding and forget how intricate the path to our current knowledge really was. This bias is closely related to hindsight bias, the tendency to believe that an event was predictable all along once it has occurred. Once we have clear knowledge, we have difficulty reconstructing our own prior mental states of confusion and ignorance.

When teaching someone something new, go slow and explain like they're ten years old (without being patronizing). Repeat key points and facilitate active practice to help embed knowledge.

Confirmation bias

You favor things that confirm your existing beliefs.

We are primed to see and agree with ideas that fit our preconceptions, and to ignore and dismiss information that conflicts with them. You could say that this is the mother of all biases, as it affects so much of our thinking through motivated reasoning. To help counteract its influence we ought to presume ourselves wrong until proven right.

Think of your ideas and beliefs as software you're actively trying to find problems with rather than things to be defended. "The first principle is that you must not fool yourself – and you are the easiest person to fool." - Richard Feynman

The Dunning-Kruger effect

The more you know, the less confident you're likely to be.

Because experts know just how much they don't know, they tend to underestimate their ability; but it's easy to be overconfident when you have only a simple idea of how things are. Try not to mistake the cautiousness of experts for a lack of understanding, nor to give much credence to laypeople who appear confident but have only superficial knowledge.

“The whole problem with the world is that fools and fanatics are so certain of themselves, yet wiser people so full of doubts.” - Bertrand Russell

Belief bias

If a conclusion supports your existing beliefs, you'll rationalize anything that supports it.

It's difficult for us to set aside our existing beliefs to consider the true merits of an argument. In practice, this means that our ideas become impervious to criticism and are perpetually reinforced. Instead of thinking about our beliefs in terms of 'true or false', it's probably better to think of them in terms of probability. For example, we might assign a 95%+ chance that thinking in terms of probability will help us think better, and a less than 1% chance that our existing beliefs have no room for any doubt. Thinking probabilistically forces us to evaluate more rationally.

A useful thing to ask is 'when and how did I get this belief?' We tend to automatically defend our ideas without ever really questioning them.

Self-serving bias

You believe your failures are due to external factors, yet you're responsible for your successes.

Many of us enjoy unearned privileges, luck, and advantages that others do not. It's easy to tell ourselves that we deserve these things, whilst blaming circumstance when things don't go our way. Our desire to protect and exalt our own egos is a powerful force in our psychology. Fostering humility can help counteract this tendency, whilst also making us nicer humans.

When judging others, be mindful of how this bias interacts with the just-world hypothesis, fundamental attribution error, and the in-group bias.

The backfire effect

When some aspect of your core beliefs is challenged, it can cause you to believe even more strongly.

We can experience being wrong about some ideas as an attack upon our very selves, or our tribal identity. This can lead to motivated reasoning that reinforces our beliefs despite disconfirming evidence. Recent research shows that the backfire effect doesn't happen all the time. Most people will accept a correction relating to specific facts; however, the backfire effect may reinforce a related or 'parent' belief as people attempt to reconcile a new narrative with their understanding.

“It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.” - Mark Twain

The Barnum effect

You see personal specifics in vague statements by filling in the gaps.

Because our minds are given to making connections, it's easy for us to take nebulous statements and find ways to interpret them so that they seem specific and personal. The combination of our egos wanting validation with our strong inclination to see patterns and connections means that when someone is telling us a story about ourselves, we look to find the signal and ignore all the noise.

Psychics, astrologers and others use this bias to make it seem like they're telling you something relevant. Consider how things might be interpreted to apply to anyone, not just you.

Groupthink

You let the social dynamics of a group situation override the best outcomes.

Dissent can be uncomfortable and dangerous to one's social standing, so the most confident or first voice often determines group decisions. Because of the Dunning-Kruger effect, the most confident voices are also often the most ignorant.

Rather than openly contradicting others, seek to facilitate objective means of evaluation and critical thinking practices as a group activity.

Negativity bias

You allow negative things to disproportionately influence your thinking.

The pain of loss and hurt is felt more keenly and persistently than the fleeting gratification of pleasant things. We are primed for survival, and our aversion to pain can distort our judgment in the modern world. In an evolutionary context it makes sense for us to be heavily biased to avoid threats, but because this bias also affects our judgments in other areas, we may not give enough weight to the positives.

Pro-and-con lists, as well as thinking in terms of probabilities, can help you evaluate things more objectively than relying on a cognitive impression.

Declinism

You remember the past as better than it was, and expect the future to be worse than it will likely be.

Despite living in the most peaceful and prosperous time in history, many people believe things are getting worse. The 24-hour news cycle, with its reporting of overtly negative and violent events, may account for some of this effect. We can also see how the generally optimistic view of the future held in the early 20th century shifted to dystopian and apocalyptic expectations after the world wars and during the Cold War. The greatest tragedy of this bias may be that our collective expectation of decline can become a real-world self-fulfilling prophecy.

Instead of relying on nostalgic impressions of how great things used to be, use measurable metrics such as life expectancy, levels of crime and violence, and prosperity statistics.

The framing effect

You allow yourself to be unduly influenced by context and delivery.

We all like to think that we think independently, but the truth is that all of us are, in fact, influenced by delivery, framing, and subtle cues. This is why the ad industry is a thing, despite almost everyone believing they're not affected by advertising messages. The way a question is phrased, such as the wording of a proposed law being voted on, has been shown to have a significant effect on the outcome.

Only when we have the intellectual humility to accept the fact that we can be manipulated, can we hope to limit how much we are. Try to be mindful of how things are being put to you.

Fundamental attribution error

You judge others on their character, but yourself on the situation.

If you haven’t had a good night’s sleep, you know why you’re being a bit slow; but if you observe someone else being slow, you don’t have such knowledge, and so you might presume them to just be a slow person. Because of this disparity in knowledge, we often overemphasize circumstance when explaining our own failings and underestimate it when explaining other people's problems.

It's not only kind to view others' situations with charity, it's more objective too. Be mindful to also err on the side of taking personal responsibility rather than justifying and blaming.

The halo effect

How much you like someone, or how attractive they are, influences your other judgments of them.

Our judgments are associative and automatic, and so if we want to be objective we need to consciously control for irrelevant influences. This is especially important in a professional setting. Things like attractiveness can unduly influence issues as important as a jury deciding someone's guilt or innocence. If someone is successful or fails in one area, this can also unfairly color our expectations of them in another area.

If you notice that you're giving consistently high or low marks across the board, it's worth considering that your judgment may be suffering from the halo effect.

Optimism bias

You overestimate the likelihood of positive outcomes.

There can be benefits to a positive attitude, but it's unwise to allow such an attitude to adversely affect our ability to make rational judgments (they're not mutually exclusive). Wishful thinking can be a tragic irony insofar as it can create more negative outcomes, such as in the case of problem gambling.

If you make rational, realistic judgments you'll have a lot more to feel positive about.

Pessimism bias

You overestimate the likelihood of negative outcomes.

Pessimism is often a defense mechanism against disappointment, or it can be the result of depression and anxiety disorders. Pessimists often justify their attitude by saying that they'll either be vindicated or pleasantly surprised; however, a pessimistic attitude may also limit potential positive outcomes. It should also be noted that pessimism is very different from skepticism: the latter is a rational approach that seeks to remain impartial, while the former is an expectation of bad outcomes.

Perhaps the worst aspect of pessimism is that even if something good happens, you'll probably feel pessimistic about it anyway.

Just-world hypothesis

Your preference for justice makes you presume it exists.

A world in which people don't always get what they deserve, hard work doesn't always pay off, and injustice happens is an uncomfortable one that threatens our preferred narrative. However, it is also the reality. This bias often manifests in ideas such as 'what goes around comes around' or an expectation of 'karmic balance', and it can also lead to blaming victims of crime and circumstance.

A more just world requires understanding rather than blame. Remember that everyone has their own life story, we’re all fallible, and bad things happen to good people.

In-group bias

You unfairly favor those who belong to your group.

We presume that we're fair and impartial, but the truth is that we automatically favor those who are most like us, or who belong to our groups. This blind tribalism has evolved to strengthen social cohesion; however, in a modern and multicultural world it can have the opposite effect.

Try to imagine yourself in the position of those in out-groups; whilst also attempting to be dispassionate when judging those who belong to your in-groups.

The placebo effect

If you believe you're taking medicine it can sometimes 'work' even if it's fake.

The placebo effect can work for things that our mind influences (such as pain) but not so much for things like viruses or broken bones. Factors like the size and color of pills can influence how strong the effect is and may even result in real physiological outcomes. We can also falsely attribute getting better to an inert substance simply because our immune system has fought off an infection (i.e., we would have recovered in the same amount of time anyway).

Homeopathy, acupuncture, and many other forms of natural 'medicine' have been proven to be no more effective than placebo. Keep a healthy body and bank balance by using evidence-based medicine from a qualified doctor.

The bystander effect

You presume someone else is going to do something in an emergency situation.

When something terrible is happening in a public setting we can experience a kind of shock and mental paralysis that distracts us from a sense of personal responsibility. The problem is that everyone can experience this sense of deindividuation in a crowd. This same sense of losing our sense of self in a crowd has been linked to violent and anti-social behaviors. Remaining self-aware requires some amount of effortful reflection in group situations.

If there's an emergency situation, presume to be the one who will help or call for help. Be the change you want to see in the world.

Reactance

You'd rather do the opposite of what someone is trying to make you do.

When we feel our liberty is being constrained, our inclination is to resist; however, in doing so we can overcompensate. While blind conformity is far from an ideal way to approach things, neither is being a knee-jerk contrarian.

Be careful not to lose objectivity when someone is being coercive or manipulative, or is trying to force you to do something. Wisdom springs from reflection, folly from reaction.

The spotlight effect

You overestimate how much people notice how you look and act.

Most people are much more concerned about themselves than they are about you. Absent overt prejudices, people generally want to like and get along with you as it gives them validation too. It's healthy to remember that although we're the main character in the story of our own life, everyone else is center-stage in theirs too.

Instead of worrying about how you’re being judged, consider how you make others feel. They'll remember this much more, and you'll make the world a better place.

yourfallacy.is is an initiative of The School of Thought, a 501(c)(3) nonprofit organization. Support their work by donating and purchasing posters and cards at the Thinking Shop.

Bruno Unfiltered
